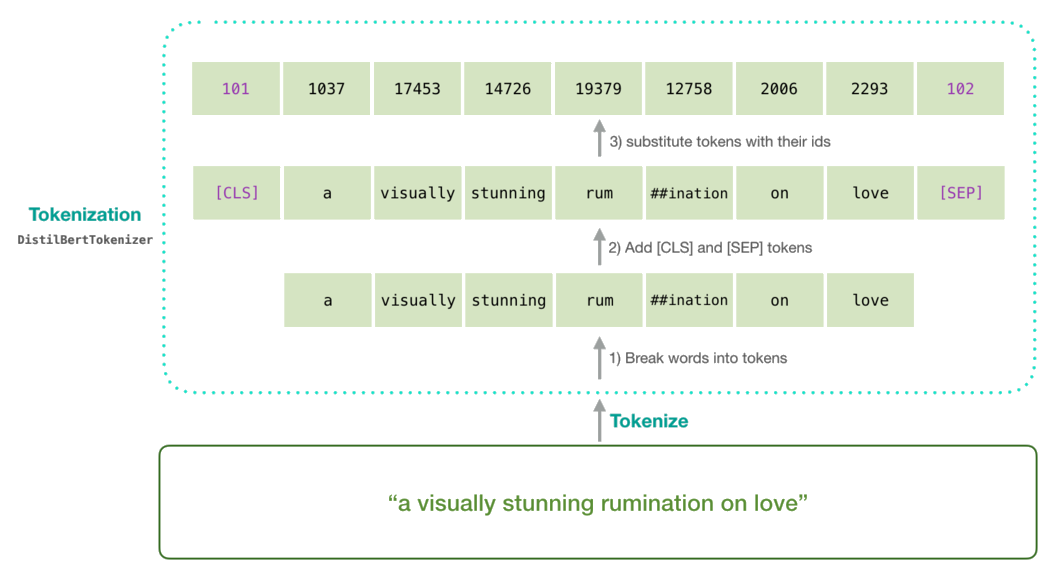

Hugging Face Transformers: Fine-tuning DistilBERT for Binary Classification Tasks | Towards Data Science

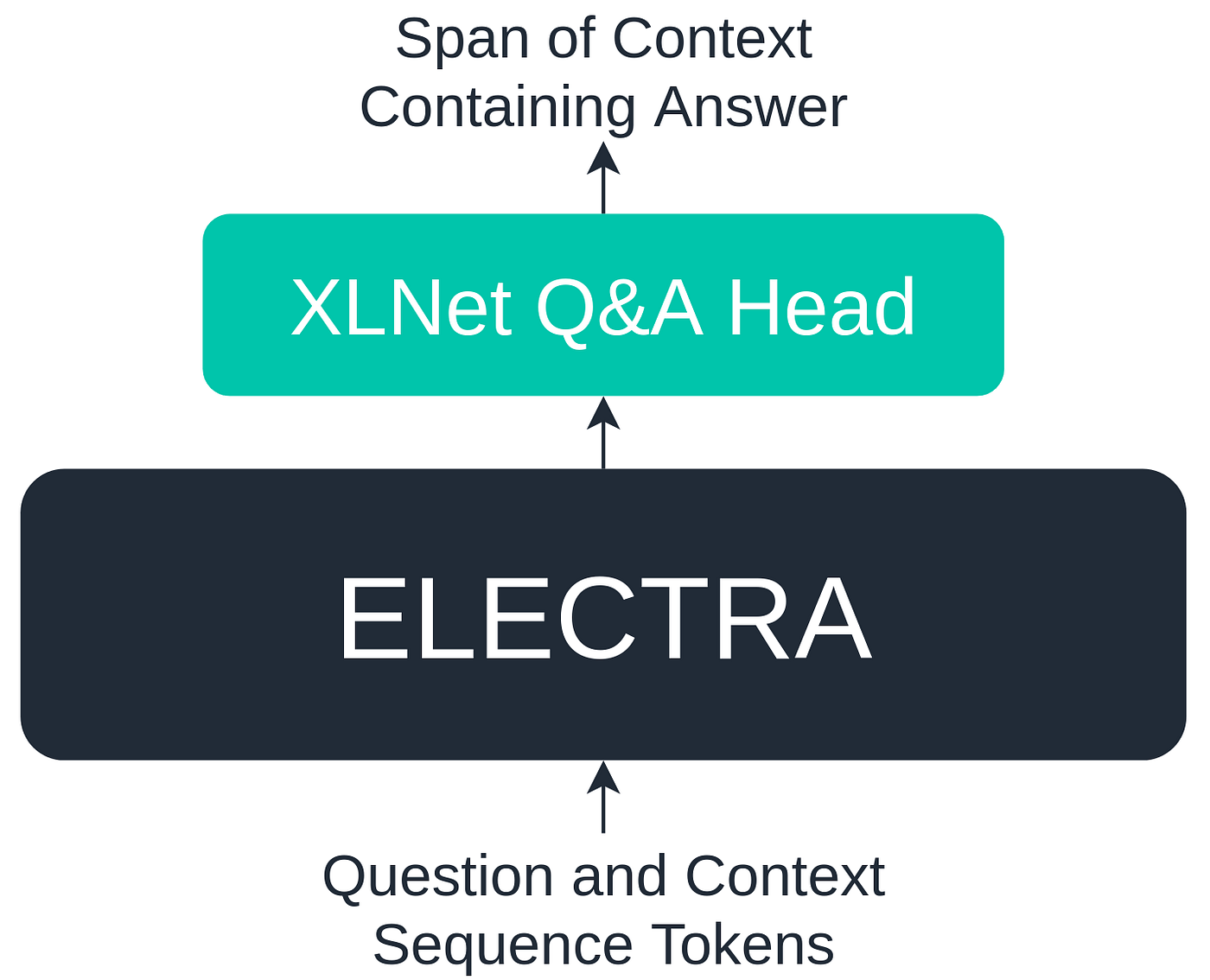

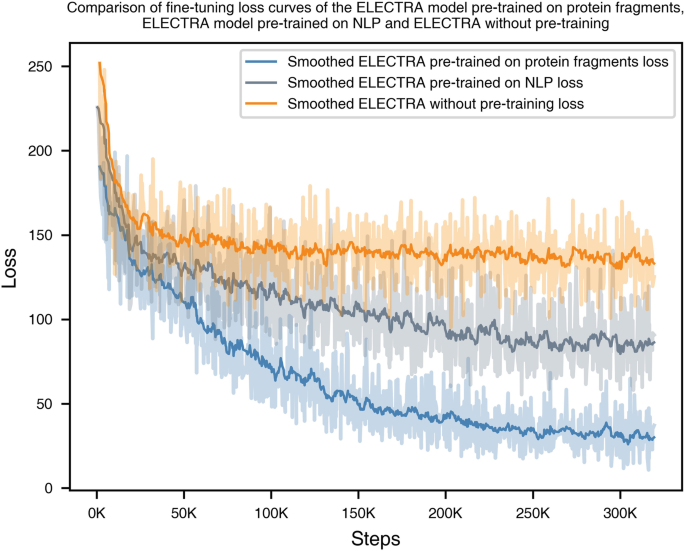

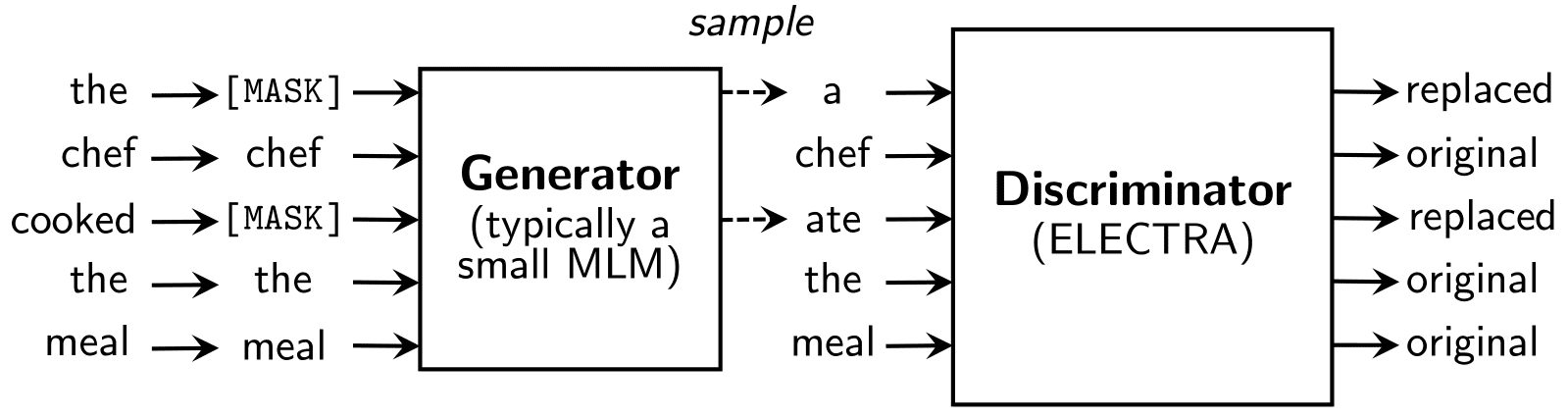

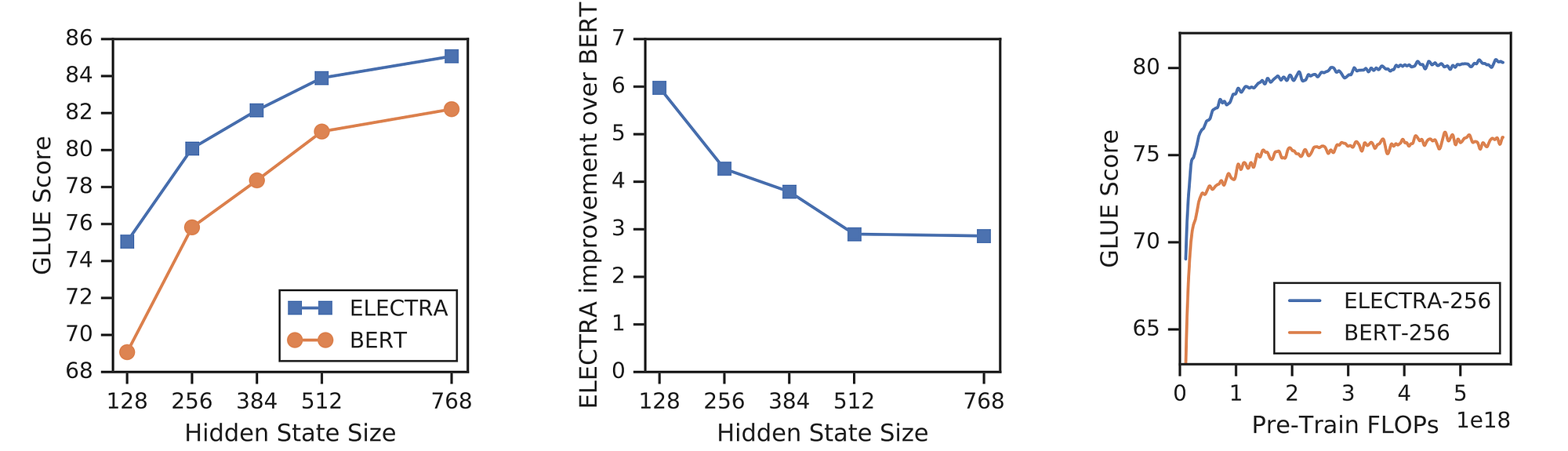

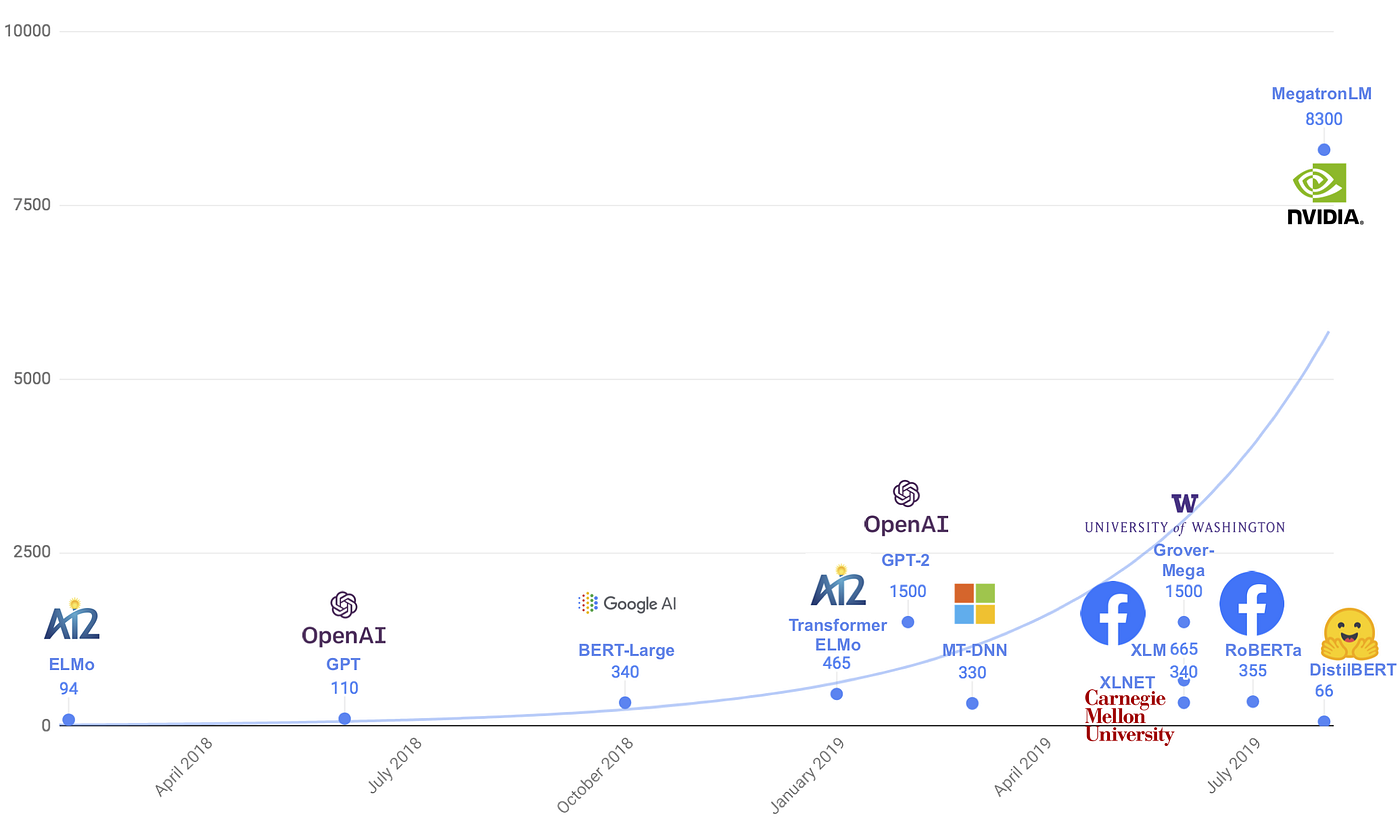

Understanding ELECTRA and Training an ELECTRA Language Model | by Thilina Rajapakse | Towards Data Science

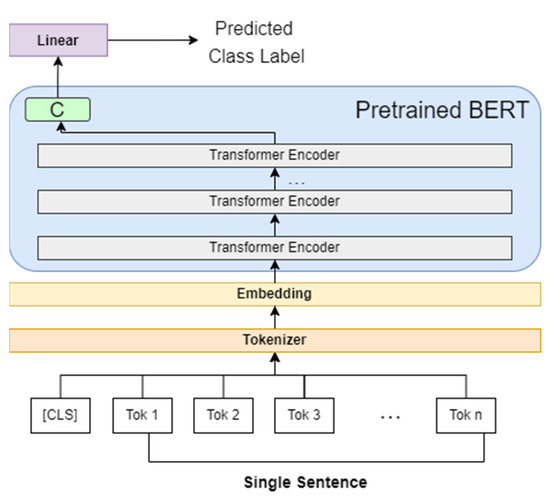

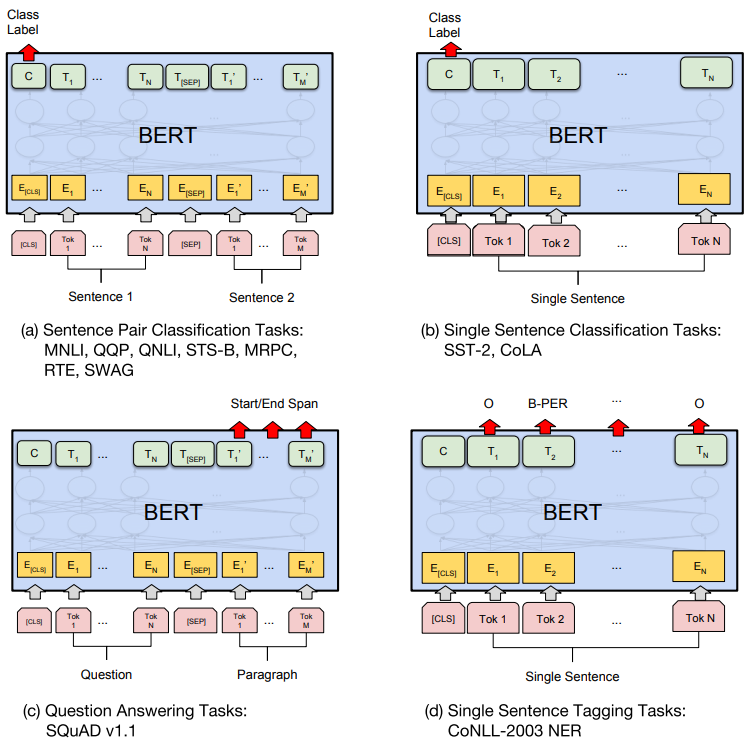

Illustration of KG-ELECTRA fine-tuning for triples classification task... | Download Scientific Diagram